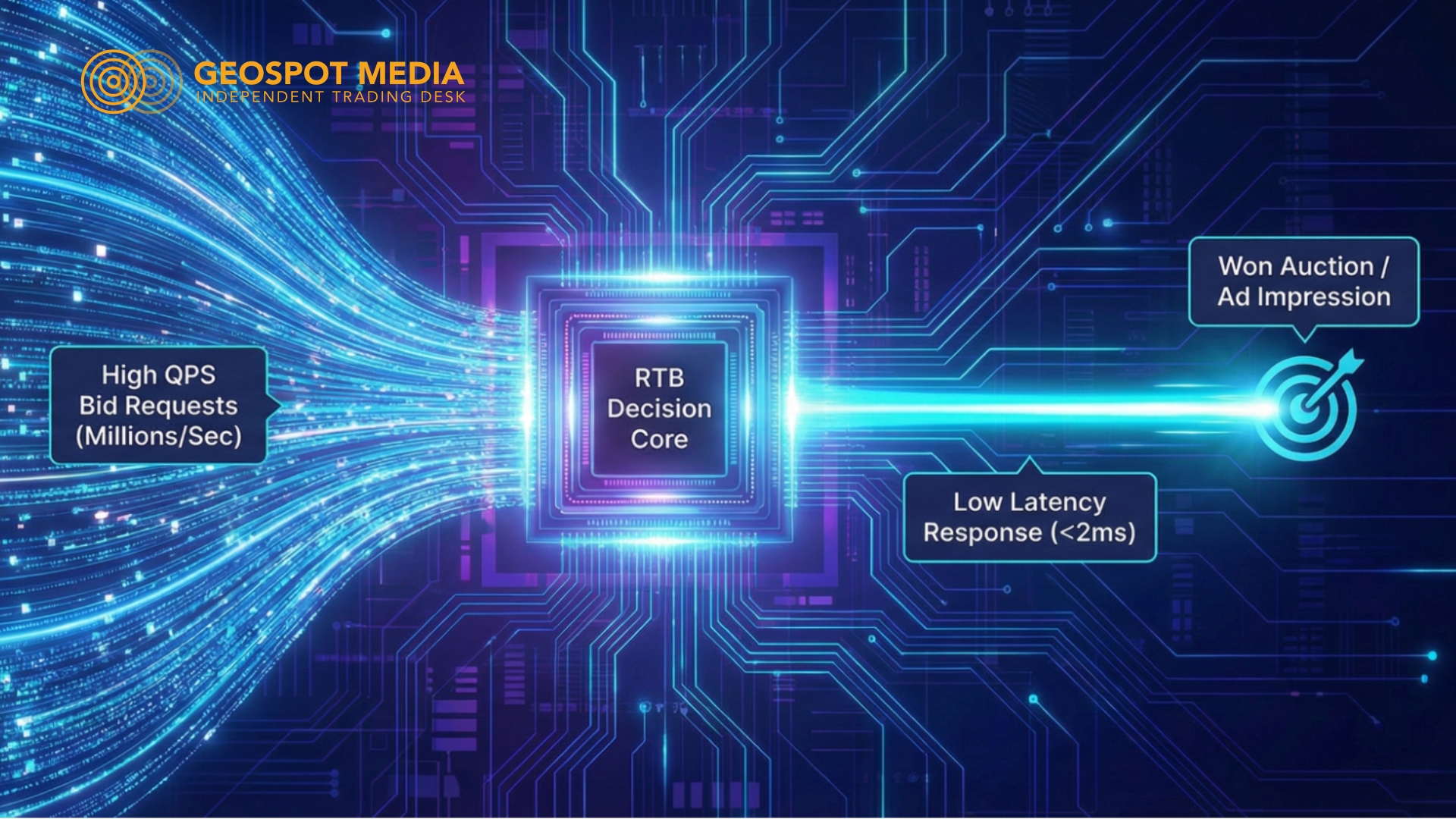

Milliseconds Matter: How We Optimized Our Bidder for 2ms Latency

In the world of programmatic advertising and Real-Time Bidding (RTB), speed isn’t just a metric; it’s the entire business. We operate in a strict time window: we have to receive a request, decide whether we want it, calculate the price, and send a response. If we are too slow, we don’t just lose the auction; we don’t even get to compete.

At GeoSpot Media, our bidder architecture has undergone a massive evolution to meet the demands of rising QPS (Queries Per Second). Here is the story of how we moved from standard database queries to a hybrid in-memory architecture, cutting our internal processing latency by over 50%.

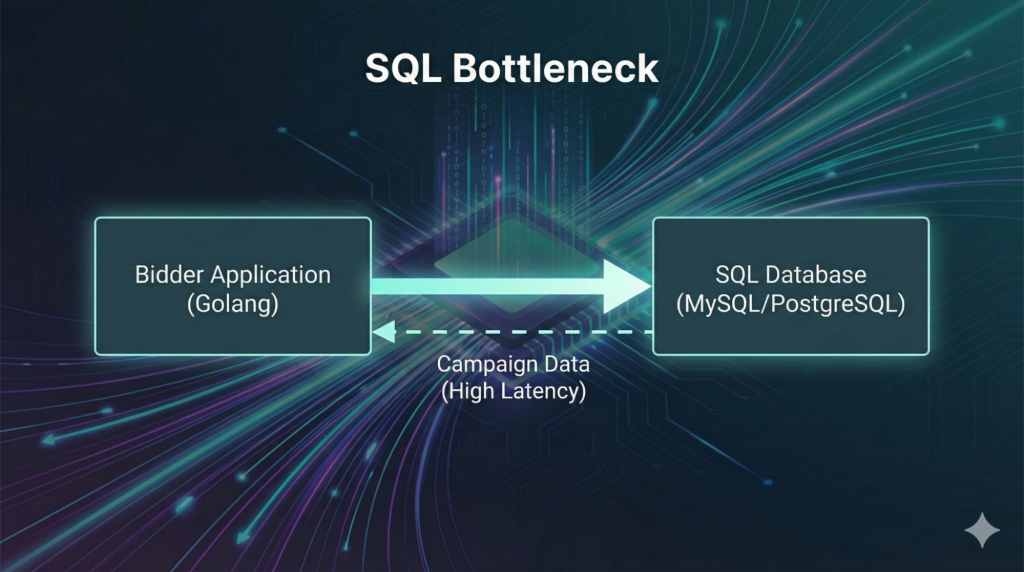

The Early Days: The SQL Bottleneck

When our bidder first launched, our traffic was modest. At that stage, our architecture was straightforward: when a bid request came in, we would query a relational database (MySQL/PostgreSQL) to fetch campaign targeting data.

It worked, until it didn’t.

Relational databases are robust, but they aren’t built for the high-volume, millisecond-level point lookups that RTB demands, a workload closer to high-frequency trading than to typical web traffic. As our QPS grew, the database became a choke point. Establishing connections and executing complex queries added so much latency that we were spending too much of our 100ms budget just fetching data, leaving little time for the actual decisioning logic. We knew we had to change.

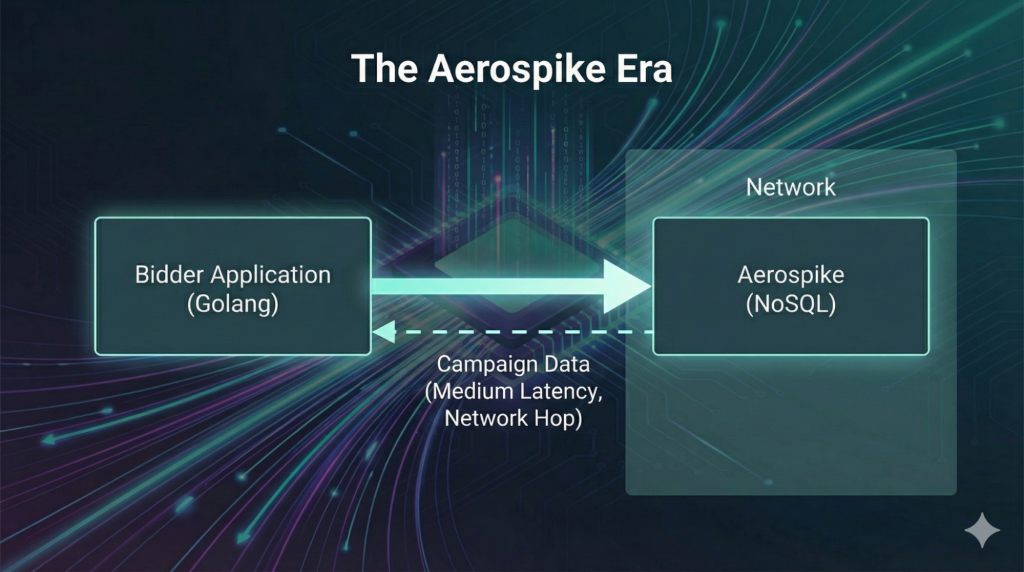

Phase 1: The Aerospike Era

To solve the latency crisis, we evaluated several tech stacks and landed on Aerospike.

Aerospike is a beast when it comes to speed and high throughput. By migrating our data to this NoSQL solution, we saw immediate results: we eliminated the overhead of SQL and brought our internal lookup latency down to between 4ms and 6ms.

For a while, this was sufficient. We were handling higher QPS, and the system was stable. But in engineering, “stable” is just a temporary state before the next scale-up.

The Hidden Cost of Network I/O

As we aimed to scale further to handle massive global traffic, we noticed a new bottleneck. While 4ms is fast, every one of those lookups still involves a network hop.

When you are processing hundreds of thousands of requests per second, even a tiny amount of network latency compounds. Every time our software had to reach out to Aerospike over the network, it introduced a wait time. At high concurrency, these tiny waits led to increased CPU usage as the system managed thousands of open connections and context switches.
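Little’s law gives a rough sense of why small waits compound. The average number of lookups in flight, $L$, equals the arrival rate $\lambda$ times the average time each one waits, $W$. Assuming, purely for illustration, 200,000 requests per second, one remote lookup per request, and $W = 5\text{ms}$ (the middle of the 4ms to 6ms range):

```latex
L = \lambda W = (200{,}000\ \text{req/s}) \times (0.005\ \text{s}) = 1{,}000 \ \text{lookups in flight}
```

Each in-flight lookup holds a connection and a parked goroutine, and every extra millisecond of $W$ adds $\lambda \times 0.001\,\text{s} = 200$ more of them. Conversely, shrinking $W$ shrinks that pool proportionally.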

We realized that to process more QPS with the same hardware, we had to stop crossing the network for every single decision.

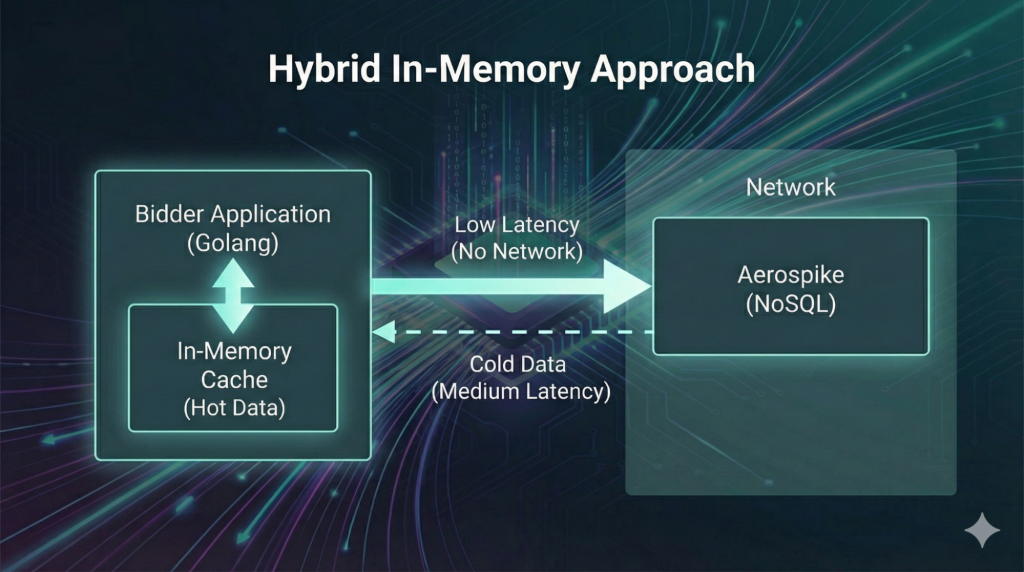

Phase 2: The Hybrid In-Memory Approach

The solution was to bring the data closer to the code.

Our bidder is written in Golang, a language designed for high concurrency and speed. We decided to leverage Go’s efficiency by moving our “hot” targeting data directly into an in-memory cache within the application itself.

This wasn’t about abandoning Aerospike; it was about optimization. Aerospike is excellent for storing vast amounts of user profile data that is too large to keep in RAM. Campaign targeting rules, however, are accessed frequently and fit comfortably in memory.
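A minimal sketch of what an in-process targeting cache can look like in Go, assuming a read-mostly workload where the full rule set is rebuilt in the background and swapped in atomically. The `Campaign` and `TargetingCache` types are hypothetical, the refresh loop that would rebuild the map (from Aerospike or the campaign database) is omitted, and `atomic.Pointer` requires Go 1.19 or later. This shows one reasonable design, not necessarily our production code.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// Campaign is a hypothetical, trimmed-down targeting record.
type Campaign struct {
	ID        string
	Countries map[string]bool
	MaxCPM    float64
}

// TargetingCache holds an immutable snapshot that readers load without locks.
// Snapshots must be treated as read-only once published.
type TargetingCache struct {
	snap atomic.Pointer[map[string]Campaign]
}

// Swap publishes a freshly built snapshot; readers already holding the old
// snapshot finish with it, new readers see the new one.
func (c *TargetingCache) Swap(next map[string]Campaign) {
	c.snap.Store(&next)
}

// Get is the hot-path lookup: one atomic load plus a map read, no network I/O.
func (c *TargetingCache) Get(id string) (Campaign, bool) {
	m := c.snap.Load()
	if m == nil {
		return Campaign{}, false // nothing published yet
	}
	camp, ok := (*m)[id]
	return camp, ok
}

func main() {
	var cache TargetingCache
	cache.Swap(map[string]Campaign{
		"c1": {ID: "c1", Countries: map[string]bool{"US": true}, MaxCPM: 2.5},
	})
	if camp, ok := cache.Get("c1"); ok && camp.Countries["US"] {
		fmt.Println("eligible, max CPM", camp.MaxCPM)
	}
}
```

Copy-on-write snapshots suit this workload because reads vastly outnumber writes: the bid path never takes a lock, and the cost of rebuilding the whole map is paid off the hot path.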

We adopted a hybrid approach:

- In-Memory Cache: Used for high-frequency targeting checks. This eliminated the network hop entirely for most of our decisioning logic.

- Aerospike: Retained for heavy lifting, storing large datasets and user profiles that we access only when necessary.

The Result: 2ms Latency

The impact of this shift was drastic. By cutting out the network round-trip for targeting data, our average internal latency dropped from ~5ms down to 2-3ms.

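Because our Golang application was no longer waiting on network I/O for targeting data, it stopped spending CPU on managing thousands of open connections and context switches, and our CPU efficiency skyrocketed. We could suddenly handle significantly higher QPS on the same infrastructure.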

Why this matters for our clients: A faster bidder means we can evaluate more opportunities. By saving milliseconds on data retrieval, we have more time to run complex algorithms for bid shading and valuation. Ultimately, this architecture allows GeoSpot Media to process more traffic, find the perfect user, and win the auction more often.

We are never “done” optimizing, but today, our hybrid stack gives us the speed we need to compete at the highest level.
